Abstract

A lack of formal peer review training hinders the development of the next generation of peer reviewers. In 2018, the Genetics Society of America launched a formal program to help early career researchers improve their peer review skills in a live journal environment with direct feedback from editors. This article summarizes the history, operation, and some outcomes of the program.

It is no secret that peer review training is both varied and informal.1 A 2015 survey by Wiley2 showed that 35% of respondents obtained peer review training as advice from supervisors or colleagues, 32% from a journal’s instructions for reviewers, and 18% from the ethical guidelines of the Committee on Publication Ethics (COPE). Publons’ 2018 Global State of Peer Review report found that 39.4% of survey respondents had received no peer review training and that 80% believed more training would have a positive impact on peer review.3

This lack of training does not reflect a lack of interest in the peer review process. In the Wiley survey, 77% of respondents said they would like to receive further reviewer training, and among respondents with 5 or fewer years of reviewing experience, the figure rose to 89%.2 This finding is echoed in Sense about Science’s 2009 and 2019 surveys of peer review.4 COPE’s Ethical Guidelines for Peer Reviewers5 dedicates a section to training and mentoring that encourages early career researchers to take advantage of free online tutorials, such as those provided by Publons or Sense about Science.

While the online modules already available to peer reviewers provide a wealth of knowledge, few programs allow researchers to improve their peer review skills in a live journal environment and to receive direct feedback from editors. Addressing this gap in available resources was part of the impetus for the formation of the GENETICS Peer Review Training Program. Now in its third year, the program has provided 200 Genetics Society of America (GSA) members, who are organized into cohorts by application cycle, with live peer review experience. In addition to receiving virtual training on the peer review process, early career researchers actively engage with the process. This builds both critical thinking and scientific writing skills as they write reviews, observe experienced reviewers evaluating manuscripts, learn how editors synthesize reviews to arrive at a decision, and receive direct feedback on their own reviews from working editors.

Program History

GENETICS is GSA’s flagship journal; its notable history began in 1916, and the journal has grown and evolved to meet the changing needs of authors and readers for more than a century. GENETICS is organized into topical sections based on subfields of genetics research. Each section is overseen by a Senior Editor, who evaluates papers for suitability for the journal and then assigns them to an Associate Editor to oversee the peer review process.

In response to the lack of formal training in the peer review process—especially training that comes with hands-on experience and professional feedback—the GSA Publications Committee, the GENETICS Editorial Board, and Sonia Hall (former Director of Engagement and Development at GSA) launched the GENETICS Peer Review Training Program.6

Work on the program began in 2017 with the development of the training workflow and materials, reporting and benchmarking plans, and the initial application process. It also required investment in customizing workflows within the manuscript submission system to best support the vision of the program. This upfront investment of development hours and staff time allowed the review and feedback workflows to be automated, a crucial component that allows the program to run smoothly while reducing daily staff intervention.

Prior to the launch of the full program, a pilot was conducted in 3 of the journal’s 10 sections. This allowed time for troubleshooting and provided staff with information on how best to engage journal editors, since editor buy-in and participation are crucial to the success of the program. After the conclusion of the pilot program, GENETICS Editor in Chief Mark Johnston and journal staff conducted meetings with editors across the journal to introduce them to the program, educate them on the process, and address their questions and concerns.

Application Process

The GSA solicits applications on an annual basis, and eligible applicants are limited to GSA members who are senior graduate students up through junior faculty. Preference is given to applicants who have experience with peer review from the author’s point of view (particularly as first author), although this is not a requirement. All applicants are asked to state what they want to achieve through participation in the program in terms of both professional and career development.

Applications are reviewed by a committee comprising GSA journals staff, GENETICS editors, and past early career reviewers (ECRs). Because a past cohort rolls off in the same year that a new cohort starts, careful consideration is given to each section’s coverage needs and manuscript submission volume, as well as how many ECRs remain from the previous cohorts. Avoiding unbalanced ratios of ECRs to incoming manuscripts is a priority, as participants must have ample opportunities to review during their 2-year term. The application process is competitive—only 17% of applicants were accepted in the last cohort—but applicants are encouraged to re-apply and are given preference in future application cycles. Applicants who are not selected are also provided with feedback about their application and where they could improve.

Introduction to Peer Review

Upon acceptance to the program, participants form a new cohort that completes, as a group, approximately 5 hours of virtual training through 2 web conferencing sessions led by journal staff, GENETICS editors, and past program participants. The first session outlines the program policies and expectations, discusses the principles and ethics of peer review, and closes with an open discussion led by past ECRs about their experiences in the program. The second session covers the journey of a manuscript at GENETICS, best practices for peer review, and use of the manuscript submission system, and it closes with a practice review session led by GENETICS editors.

The original introductory sessions were substantially longer, but participant feedback led staff to move some content into the program training manual, which participants review independently before the web conferencing sessions. The practice review, for example, is now completed by ECRs ahead of the second session and provided to editors in advance for individual feedback. This frees up time in the sessions to discuss the reviewing experience, including the challenges that participants faced while completing the review.

Hands-on Experience in Peer Review

After successful completion of the 2 introductory sessions, program participants are assigned to a section of GENETICS based on their expertise, and some participants are cross-listed in sections to increase their chances of encountering manuscripts that fit their specialties. Participants receive review invitations for all initial submissions sent to their assigned section(s); this happens automatically within the submission system and does not require action from the Associate Editor or staff. Once one ECR agrees to review a manuscript, the other ECRs who have been invited to review that manuscript are notified that they are not needed. To address concerns about fairness, especially given differences in time zones, any ECR who has already reviewed for GENETICS must wait 72 hours after the review request to accept. Additionally, no ECR may review more than one manuscript at a time without staff permission.

After submitting a review, the ECR receives copies of the other reviews, as well as the decision letter that is sent to the authors. Associate Editors are asked to provide feedback through an automated form, which gives the editor checkbox prompts and open-ended questions to evaluate the ECR’s review (Table 1). Of all the training offered in the program, participants found this feedback the most useful for identifying areas for improvement and for validating their hard work and progress.

Table 1. Checkboxes and questions for editor feedback form.

| Checkboxes: Overall Quality | Checkboxes: Scientific Rigor | Text Boxes: Feedback Regarding |
| --- | --- | --- |
| The review was clearly written | The review effectively highlighted key issues with the manuscript | The review effectively highlighted key issues with the manuscript |
| Tone of the review is professional and polite | Evaluation of the scientific rigor was an appropriate level of detail | Reviewer’s determination of whether this manuscript is a good fit for the journal |
| The review revealed a strong grasp of the material | Scientific comments provided by the reviewer were accurate | Comments for this reviewer that could help them improve the quality of their future reviews |
| The review was an appropriate length | Additional proposed experiments would add significantly to the quality of the manuscript | |
| The reviewer had sufficient scientific expertise to provide an appropriate review | Additional proposed experiments are feasible within a reasonable timeframe | |
| Use of jargon is sufficiently minimal | | |

Program Metrics

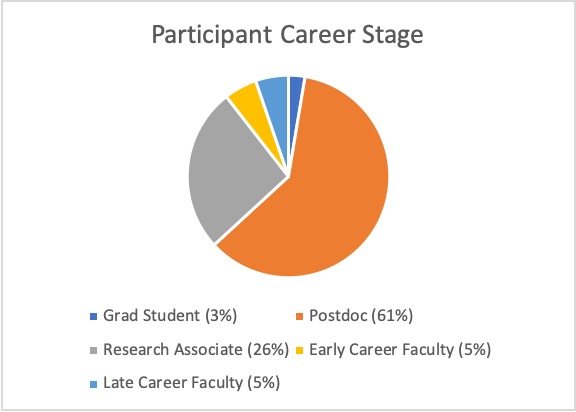

Participants who apply and are accepted into the program come from many career stages; however, our last cohort consisted largely of Postdocs (61%), followed by Research Associates (26%) (Figure 1). Research Associates are generally experienced Postdocs who have gained a more permanent position within a lab.

Much of the work published in GENETICS deals with model organism systems (such as mouse, Drosophila, and yeast), and a diverse range of model organisms is represented among both applicants and participants. Some high-demand topical areas, such as gene expression, continue to draw many qualified ECRs.

In terms of the demographics of applicants and participants, the latest cohort was equally balanced by gender. Although approximately 17% of GSA members were based outside the United States in 2020, the latest program cohort was more international, with 27% of participants from outside the United States. However, increasing racial and ethnic diversity among the candidates who apply and are accepted remains an area for improvement. Future outreach is planned to recruit more applicants from non-R1 institutions (that is, institutions not classified as having very high research activity), as well as from historically Black colleges and universities.

Early career reviewers in the program have completed over 468 reviews, a completion rate of 93%. ECRs also complete reviews more quickly than traditional reviewers: 12.71 days vs. 22 days, on average. Editors notice this speed and commitment, and it is not uncommon for them to ask ECRs to re-review resubmissions. Overall, editor feedback to participants is overwhelmingly positive. Editors complete 59% of the requested feedback forms, often providing detailed comments and statements of praise or encouragement that go beyond simply critiquing the review (Table 2).

Table 2. Editor feedback examples.


Feedback was also solicited from ECRs throughout the program, and their responses highlight the success of the overall program design: 95% of participants indicated that the overall experience was valuable, and 86% said that editor feedback helped them see the strengths of their review. A similar proportion reported that reading the other reviews helped them pinpoint the strengths and/or weaknesses of their own review.

Besides increasing their understanding and awareness of the science in their field, participants reported developing and strengthening many “soft” skills, such as determining when to seek advice and effective time management.

Participants also indicated that the program experience has affected their confidence in reviewing manuscripts, because they now better understand how to frame their feedback and communicate it constructively. Many indicated that, as they continued to review, they learned to be more critical and selective in accepting manuscripts to review, to start their reviews sooner, to refine their requests for additional experiments, and to look at the experimental methods more closely.

Program Challenges

It is important for publishers and societies who wish to launch a peer review training program to consider both current and ongoing resource availability. A substantial amount of time was needed to plan and launch the program, but even now—2 years from the initial launch—regular staff involvement is required to manage various aspects of the program, including yearly application review, training/onboarding of new cohorts, and day-to-day tasks, such as inbox management.

Editor engagement has been an ongoing challenge of the program; despite the work done to inform editors of the program launch and the related workflow changes in the manuscript submission system, staff could not prevent all cases of potential confusion or miscommunication. But the time that staff took to closely work with editors and hear their feedback allowed for continual improvement of the resources that were provided to editors, as well as of the program itself. One of the most substantial changes resulted from an editor’s desire to know more about the expertise of the ECRs in their section—the GENETICS website now features a dedicated page to introduce each ECR to the GSA community.7

Additionally, real-world experience is necessarily constrained by real-world submissions. The program’s goal is for each ECR to review at least 1 paper that fits their expertise while they are in the program; however, if no appropriate paper is submitted during that time, the affected participants will have their terms extended in order to afford them more opportunities to gain experience with manuscripts that are directly relevant to their expertise.

Finally, there is a concern that program alumni might be underutilized because editors might consider them too junior to serve as regular reviewers or editorial board members. To address this, all participants rotate into the GENETICS reviewer pool after completing the program, and tags within the manuscript processing system clearly label them as ECR alumni, signaling to editors that they have the experience needed to provide solid reviews. We hope that the program design (2 years of reviewing accompanied by editor feedback) will lead editors to recognize these ECRs and return to them as regular reviewers after they complete the program. Currently, editors do invite ECRs to re-review resubmissions, and they also invite them personally to review new submissions. Additionally, some participants have gone on to author papers accepted by the journal.

Future Considerations

Although the GENETICS Peer Review Training Program has created new opportunities for early career researchers, staff will be implementing new reporting to measure the program’s short- and long-term outcomes. We want to evaluate who is best served by the current offerings in terms of topical expertise and participant demographics, and whom we still need to reach. Overall, our goal is to be able to show how the program furthers the society’s mission to mentor, and thus include, a more diverse subset of the community we serve.

The ongoing pandemic has forced staff to re-evaluate previous assumptions, from how staff resources are allocated to how we address potential fatigue and limited availability among our volunteer Associate Editors. Plans are underway to further automate and streamline program workflows to keep regular staff intervention to a minimum and ease burdens on editors. And as institutions of higher education continue to feel the effects of shrinking budgets and limited time in the lab, will interest in the program decline because some early career researchers simply lack the bandwidth to invest in career development, or because they have begun seeking career opportunities outside of academia?

Many unknowns await us, but we hope that by continuing to seek input from all stakeholders and improving our own reporting capabilities, we will be better positioned to enhance the program and provide what our early career researchers tell us they need—ongoing training and support as they establish themselves in their careers and contribute to the broad community of genetics and genomics research.

References and Links

1. Johnston M. Learning to peer review. Genes to Genomes. 2017. [accessed October 19, 2020]. http://genestogenomes.org/learning-to-peer-review/

2. Warne V. Rewarding reviewers – sense or sensibility? A Wiley study explained. Learned Publ. 2016;29(1):41–50. https://doi.org/10.1002/leap.1002

3. Publons. Global state of peer review 2018. 2018. https://doi.org/10.14322/publons.GSPR2018

4. Elsevier, Sense about Science. Quality, trust & peer review: researchers’ perspectives 10 years on. 2019. [accessed October 19, 2020]. http://wordpress-398250-1278369.cloudwaysapps.com/wp-content/uploads/2019/09/Quality-trust-peer-review.pdf

5. Committee on Publication Ethics, COPE Council. Ethical guidelines for peer reviewers. 2017. [accessed October 19, 2020]. https://publicationethics.org/files/Ethical_Guidelines_For_Peer_Reviewers_2.pdf

6. GSA. GENETICS Peer Review Training Program. [accessed October 19, 2020]. https://genetics-gsa.org/career-development/genetics-peer-review-training-program/

7. GENETICS. Early Career Reviewers. 2019. [accessed October 19, 2020]. https://www.genetics.org/content/early-career-reviewers

Ruth A Isaacson, MA, is Managing Editor and Sarah N Bay, PhD, is Scientific Editor and Program Manager at Genetics Society of America, Bethesda, MD; Megan M McCarty, MA, is Client Manager and Systems Support Expert, J&J Editorial, LLC, Cary, NC.