MODERATOR:

Tamara Hanna

American Chemical Society

Washington, DC

SPEAKERS:

Sonja Krane

American Chemical Society

Washington, DC

Matthew Hayes

Clarivate Analytics

Philadelphia, Pennsylvania

Erika Pastrana

Springer Nature

New York, New York

REPORTER:

Samantha Bruno Fuller

American Association for the Advancement of Science

Washington, DC

Peer review is a constantly evolving and vital aspect of scientific publication. Journals rely on editors and reviewers to volunteer their time to ensure that quality, well-vetted research is published. With such a large ask, the industry is regularly improving existing tools and inventing new ones to aid editors and reviewers. This session, “Improving Peer Review One Case Study at a Time,” highlighted three case studies of promising innovations working toward this goal.

Matthew Hayes, Director of Publons, began the session by discussing transparent peer review, which gives the process more visibility and reviewers more recognition. Because of growing interest among reviewers and publishers in adopting this type of model, a transparent peer review model was created in partnership with ScholarOne that fits within the journal's established workflows and systems. Hayes also highlighted the difference between “transparent” and “open” peer review: under the transparent model, reviewers can choose to have their review published while remaining anonymous.

With this model, the submission system collects peer review content, along with author and reviewer options, and sends it to Publons. The publisher sends a feed of the accepted articles to Publons, which then creates the article and peer review pages, registering the peer review content with a DOI. After the article is published, the peer review content is triggered to publish on Publons. The Publons badge appears on both pages, linking the published article with the published peer review content. If the reviewers have chosen to reveal their identity and have a profile with Publons, readers will also be able to access their profiles.

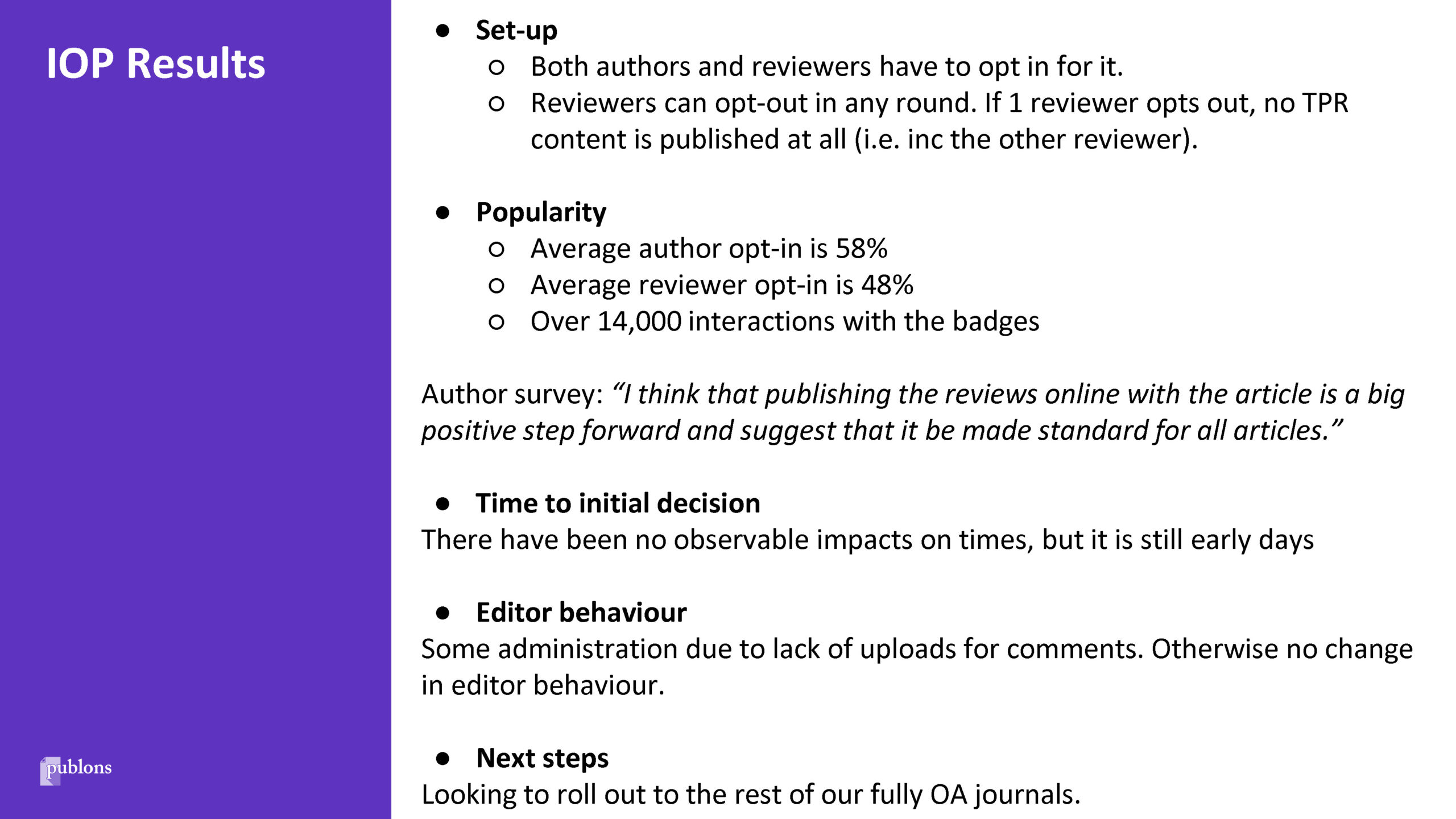

During the presentation, Hayes invited Simon Harris, Managing Editor at IOP Publishing, to present the results of their case study with this model (Figure 1).

Given the success of this model, IOP Publishing is looking to expand it to their other Open Access journals, and Publons is looking to partner with other publishers. The goal of this project is to focus more on the community aspect of peer review.

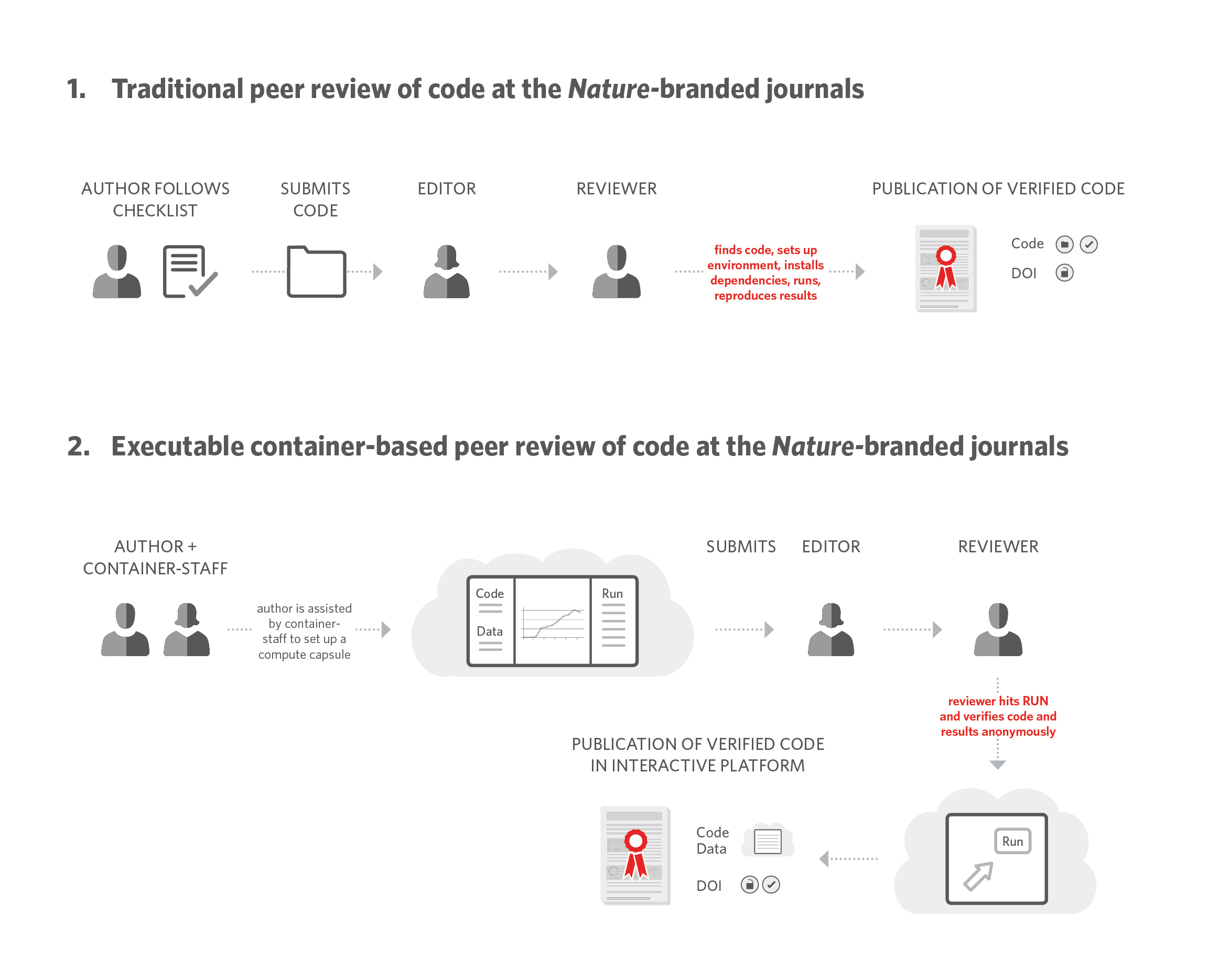

Erika Pastrana, Editorial Director at Nature Journals, gave the second presentation on integrating code publication and peer review. Code is becoming increasingly essential to research papers and is therefore an important component that should be reviewed. Properly documenting, reviewing, and sharing code was the goal of this case study.

Asking editors and reviewers to review code through the traditional review process can be very cumbersome and time-consuming. A container platform that hosts the code, data, and all the necessary environments allows the code to be run in the cloud to reproduce the results. This offers advantages to authors, reviewers, and readers. Springer Nature partnered with Code Ocean to create this platform. Authors were given the option to opt into this pilot at submission. If they opted in, they set up a Code Ocean capsule (code container), and the link to this capsule was shared privately with the editors and reviewers. If the paper was eventually accepted, readers would also gain access to this capsule, which was given its own DOI for proper recognition, citation, and code re-use (Figure 2).

Sonja Krane, Associate Publisher at the American Chemical Society (ACS), concluded the session with her presentation on AI-assisted tools. To help counteract reviewer fatigue, ACS created a stand-alone tool that recommends appropriate reviewers from its database to editors based on reviewing and publication history. The goal was to identify expert and reliable reviewers and so avoid sending additional invitations to unreliable or unresponsive reviewers. A small-scale pilot study with about a dozen editors is currently underway.

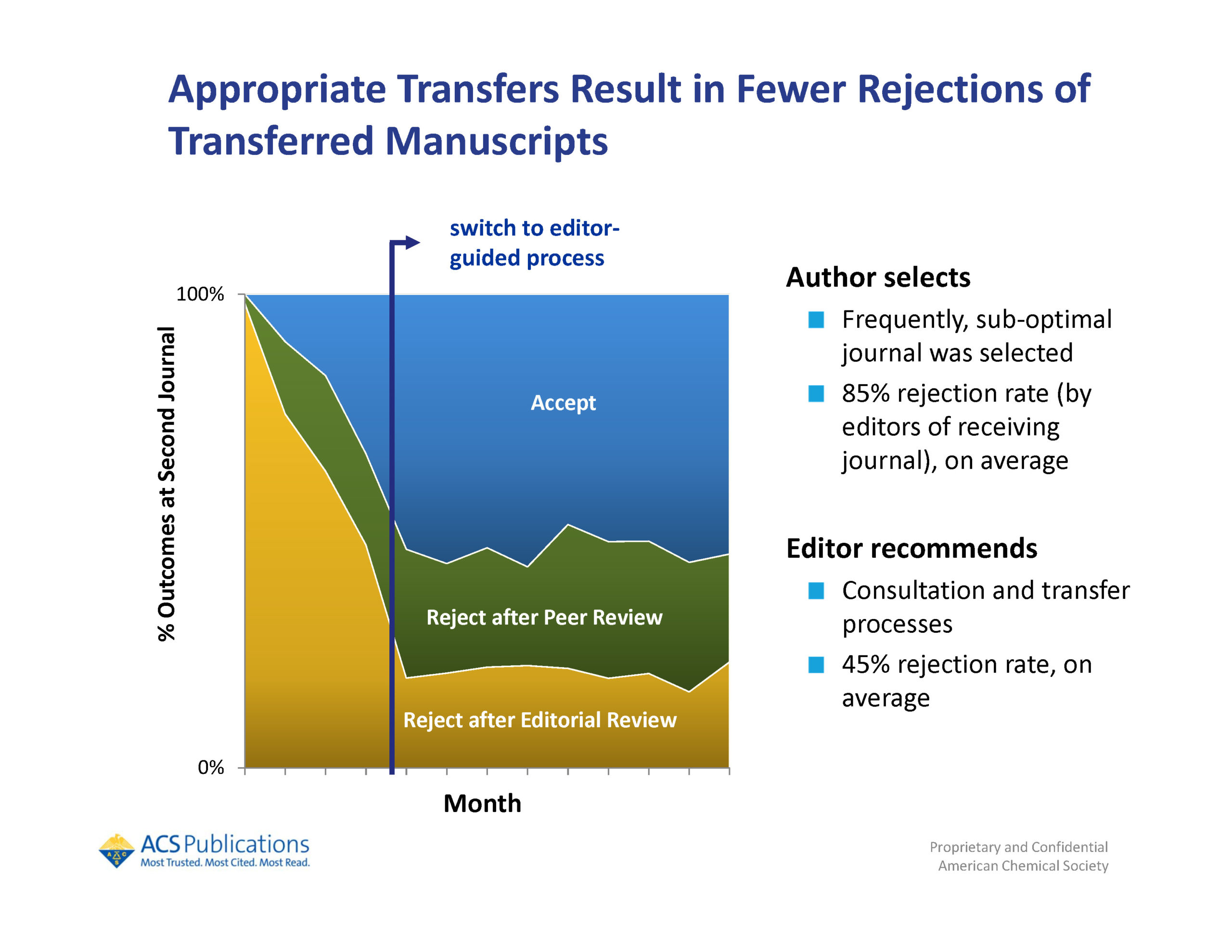

Another area where an AI tool can be of use is manuscript transfer. Seventy-five percent of rejected ACS papers were eventually published in non-ACS journals. As a publisher, it is ideal to guide authors to transfer their papers to another journal within the publisher family, and authors have an interest in transferring rather than resubmitting elsewhere. For this case study, ACS initially asked authors to choose where their papers should be transferred, but authors did not choose the most appropriate journals. ACS then used the stand-alone AI tool to help editors choose which journals the papers should transfer to if they were rejected (Figure 3).

This worked well, and papers given a reject-with-transfer decision were much more likely to be accepted at the second journal. Additionally, an author survey found that authors who were given a reject decision with a transfer option were more satisfied than authors given a reject decision with no option to transfer. These AI tools have proved useful, and the hope is to fully integrate them with the ACS submission system, ScholarOne, in the future.

The “Improving Peer Review One Case Study at a Time” session at this year’s first virtual annual meeting showcased a wide range of models and tools that can make the peer review process more complete and transparent while making it less cumbersome and time-consuming. All three presenters demonstrated great promise with their case studies, and it will be interesting to see where these innovations take peer review in the near future.

References and Links

- Pastrana E, Kousta S, Swaminathan S. Three approaches to support reproducible research. Sci Ed. 2019;42:77–82. [accessed May 26, 2020]. https://www.csescienceeditor.org/article/three-approaches-to-support-reproducible-research/.