Evaluating all players on the journal side of the peer-review process (editors, reviewers, editorial board members, and staff members) can provide benchmarks that may lead to improved journal performance. But which metrics do we look at and what numbers do we crunch? This panel of experts shared their experiences and offered practical suggestions about evaluation methods they are using to maintain their competitive edge.

Evaluating Reviewers

A recent author survey done for The Journal of Bone and Joint Surgery, Inc.,* which publishes four journals, highlighted challenges shared by journals in many different fields: Authors were concerned about the quality of reviews and the length of the review process. The editor-in-chief decided to “review the reviewers” and recognize those who received high rankings.

Authors and reviewers are “our customer base,” said Marc F. Swiontkowski, editor-in-chief of The Journal of Bone & Joint Surgery (JBJS), a biweekly orthopedic journal that receives about 1,700 submissions each year and that once counted about 1,500 reviewers in its database but now is down to 1,300. “We want reviewers who do an excellent job and [who] do it without delay.”

The journal had long used a reviewer grading system to weed out substandard reviewers, but in 2014 it began an extensive project to pare down its database, said Christina Nelson, JBJS peer review manager.

Christina Nelson

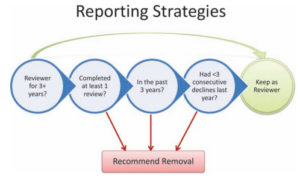

They removed any reviewer who had registered and never completed a review, hadn’t done a review in three years, or declined to review three or more times in the past year, she said (see Figure). They kept people who had registered recently but hadn’t yet had a chance to complete a review. Ultimately, they want to reduce the reviewer database to 1,000 registrants.
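The removal rules Nelson described can be sketched as a simple filter. This is only an illustration, not the JBJS system: the `Reviewer` record, its field names, and the one-year grace period for recent registrants are assumptions (the source does not say how recent "recently" is), and the sketch also assumes the decline rule applies to every reviewer.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional

# Assumed values; only the three-year and three-decline figures come from the text.
GRACE_PERIOD = timedelta(days=365)        # hypothetical: how long a new registrant is kept
INACTIVE_AFTER = timedelta(days=3 * 365)  # no completed review in three years
DECLINE_WINDOW = timedelta(days=365)      # "in the past year"
MAX_DECLINES = 3                          # three or more declines


@dataclass
class Reviewer:
    """Hypothetical reviewer record, for illustration only."""
    name: str
    registered: date
    last_completed: Optional[date] = None  # None means no review ever completed
    declined: List[date] = field(default_factory=list)


def should_remove(r: Reviewer, today: date) -> bool:
    """Return True if the reviewer meets any of the removal criteria."""
    if r.last_completed is None:
        # Never completed a review: keep only recent registrants.
        if today - r.registered > GRACE_PERIOD:
            return True
    elif today - r.last_completed > INACTIVE_AFTER:
        # Has reviewed before, but not within the last three years.
        return True
    # Assumption: the decline rule is applied to everyone, new registrants included.
    recent_declines = [d for d in r.declined if today - d <= DECLINE_WINDOW]
    return len(recent_declines) >= MAX_DECLINES
```

A database could then be pruned with something like `kept = [r for r in reviewers if not should_remove(r, date.today())]`.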

Evaluating Editors

The American Heart Association (AHA), which publishes 12 journals that as a group received 20,000 submissions in 2014, had evaluated its editors by looking at standard metrics such as time to first decision and acceptance rate. But simply looking at quantitative data wasn’t resulting in a meaningful evaluation, said Jonathan Schultz, managing editor of Circulation Research. The AHA surveyed its editors to learn what would work better and discovered that editors thought the metrics being tracked were only indirect measures of a journal’s quality and that many were out of their control.

As a result, the AHA revamped its editor evaluation process to include more qualitative measures. “They felt successful journals support the society’s mission, publish important articles and reach a large audience in a meaningful way. And successful editors have a vision and experience and they know how to curate the best collection of work,” he said.

Because qualitative goals can be harder to track, the society developed a new evaluation template that focuses on strategic goals over a three-year period. Schultz shared the template in his slides, which are also available online.

Evaluating Editorial Board Members

The Proceedings of the National Academy of Sciences (PNAS) receives 17,000 submissions each year and has an acceptance rate of about 18%. The editorial board members, who number 213 and act as gatekeepers, must maintain a reject-without-review rate of at least 50% so that the journal system isn’t overloaded, said Etta Kavanagh, the journal’s editorial manager.

To track rejection rates for individuals and the board as a whole, PNAS created reports in its online peer-review system to pull data each month. Kavanagh shared examples of the tracking spreadsheets in her slides, which are also available at the URL stated above.

PNAS shares the data each month so that board members can see the big picture as well as where they stand individually; board members are listed by name in the report. Since they began sharing the data five years ago, the reject-without-review rate has increased from 49% to 55%, Kavanagh said.
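A monthly report of this kind could be computed roughly as follows. This is a minimal sketch under stated assumptions, not the PNAS report itself: the input format (pairs of board member and decision), the decision label `"reject_without_review"`, and the function name are all hypothetical. Only the 50% target comes from the text.

```python
from collections import Counter

TARGET_RATE = 0.50  # minimum reject-without-review rate cited in the text


def reject_without_review_rates(decisions):
    """Compute per-member and overall reject-without-review rates.

    `decisions` is an iterable of (member, decision) pairs; this input
    shape and the decision label are assumptions made for illustration.
    """
    handled = Counter()
    rejected = Counter()
    for member, decision in decisions:
        handled[member] += 1
        if decision == "reject_without_review":
            rejected[member] += 1
    per_member = {m: rejected[m] / handled[m] for m in handled}
    total = sum(handled.values())
    overall = sum(rejected.values()) / total if total else 0.0
    return per_member, overall


def below_target(per_member, target=TARGET_RATE):
    """List members whose rate falls under the target, sorted by name."""
    return sorted(m for m, rate in per_member.items() if rate < target)
```

Reporting both the individual rates and the overall rate mirrors the idea of letting board members see the big picture as well as where they stand individually.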

Evaluating Staff Members

Tracking performance data gives managers and employees a treasure trove of precise information about productivity. At KWF Editorial Services, staff members track their work on specific tasks in a time-management system that can aggregate the data, which then can be used for different purposes, said Alexis Wynne Mogul, a senior managing editor.

The reports allow managers to determine if employees are meeting productivity benchmarks and to provide more training if needed. They also allow the company to supply clients with detailed monthly reports about the work accomplished. The data, displayed in spreadsheets as well as in charts and graphs, can shine a light on workflow bottlenecks and lead to improved efficiency, she said.

*A style note for this section from Christina Nelson: Technically the company should be called “The Journal of Bone and Joint Surgery, Inc.” The journal itself is called The Journal of Bone & Joint Surgery (yes, the ampersand makes a difference).