Observations and predictions based on two rounds of surveys among primarily Chinese-speaking researchers conducted in early and late 2023.

A Tumultuous 2 Years

The increasing availability of generative artificial intelligence (AI)-based tools such as ChatGPT for writing and editing, among other academic tasks, has prompted considerable debate among researchers, universities, publishers, journals, and other stakeholders over the boundaries separating the ethical and unethical use of such tools.

Some, such as the Science family of journals and the University of Hong Kong, initially imposed strict restrictions on the use of ChatGPT and other AI tools, both deeming the use of AI-generated text as plagiarism.1,2 These restrictions have since been relaxed,3-5 reflecting a general movement in academia, both in education and research, from outright bans to embracing productive and ethical use.

Authors for whom English is not a first language (hereafter, multilingual authors) face greater barriers to publication than their native English-speaking counterparts.6,7 Multilingual authors have traditionally relied on editing or translation services to ensure their manuscripts meet journals’ requirements for high standards of English. However, not all can afford professional language services, and their use increases the costs associated with publishing. Generative AI tools can be a game-changer for multilingual researchers, bringing much-needed equity to academic publishing and eliminating English fluency as a barrier to research dissemination.8

As managers at a company providing (100% human) academic editing services, we were interested in how the authors we work with, the majority of whom are multilingual, perceived the recent developments in AI and how their perceptions have evolved over the last year. Therefore, we ran 2 rounds of online surveys in early and late 2023, 6 and 12 months after the public launch of ChatGPT 3.5, respectively.

Here, we present data from these surveys and share insights into the evolution of attitudes toward AI use for writing and editing among primarily Chinese-speaking multilingual authors. Combining respondent data with insights from our experimentation with AI tools, we also present our views on what the future holds for multilingual authors as well as editing professionals.

Survey and Respondent Details

A self-developed 16-question online survey, written in English, was conducted in May 2023 (T1) and November 2023 (T2). Through email and social media posts, we invited authors, primarily multilingual and either current or prospective clients of AsiaEdit (an academic language solutions company offering editing and translation services with a focus on Chinese-speaking regions, including mainland China, Hong Kong, and Taiwan), to participate in the survey. Participation was voluntary and anonymous.

The surveys were completed by 245 respondents (T1, n = 84; T2, n = 161). Questions on the specifics of the use (non-use) of AI tools were only shown to respondents who reported they had (had not) used such tools (Figure 1). In return for their participation, the respondents were offered discount coupons redeemable for AsiaEdit services. The samples were not matched between the 2 survey rounds.

Survey Results and Our Interpretation

Awareness and Use of AI Tools for Writing and Editing

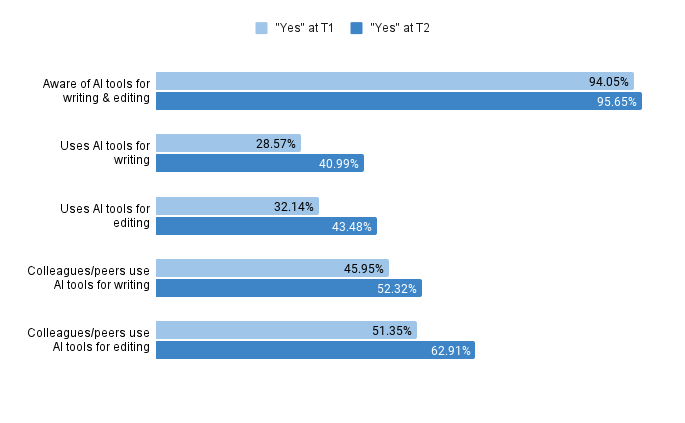

At both T1 and T2, awareness of AI tools for writing and editing (hereafter “AI tools” for brevity) was very high, with 94% and 96% of respondents reporting some level of awareness, respectively.

Attitudes toward AI use in academic writing and editing have generally relaxed over the past year, with more respondents using and/or having a positive outlook toward such tools at T2. Specifically, over the 6-month period between the surveys, the percentage of respondents who had used AI-based tools for writing research manuscripts at least once increased substantially from 29% to 41%, with the corresponding percentage for editing research manuscripts increasing from 32% to 43%. These statistics are supported by respondents’ open-ended comments, with one stating, “In my research field, I’ve found that AI is helpful for English grammar, choosing appropriate words, sentence expression, etc.”

We also asked all respondents whether they were aware of their colleagues and peers using AI tools. Consistent with the evidence of increased AI use among the respondents themselves, the T2 survey revealed increases of 7% and 11% in respondents’ reports of colleagues/peers using AI for writing and editing, respectively.

Attitudes Among AI Users

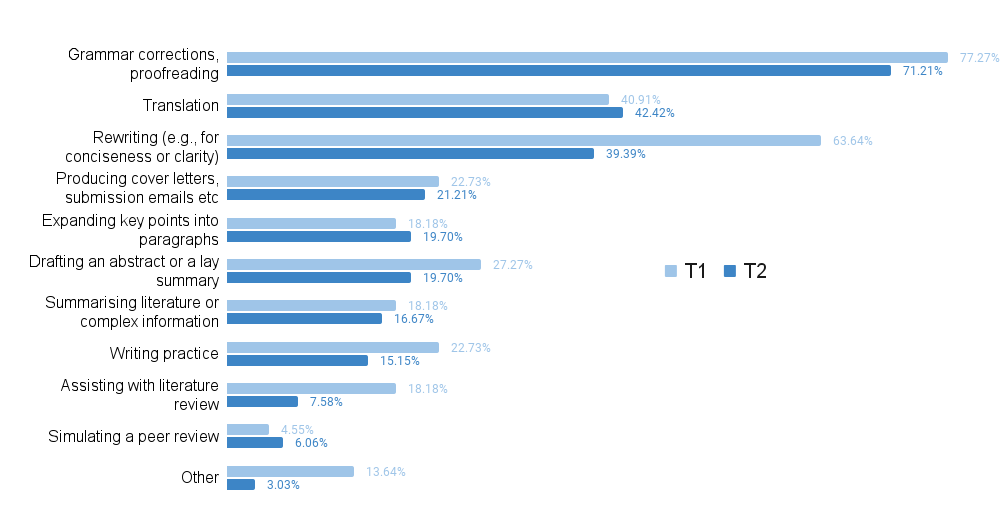

The subset of respondents who reported AI use (T1, n = 22; T2, n = 66) were asked to provide more detailed information about the specific tools they used and for which tasks they used them (Figure 2).

In terms of the AI tools used, at T1, ChatGPT was the most popular, used by 73% of AI users in our sample, followed by Grammarly at 55%. At T2, the corresponding percentages were 85% and 62%, indicating the increasing popularity of ChatGPT. This is in contrast to the findings of a survey of around 700 respondents across 82 countries by De Gruyter,9,10 which found that “Only [a] few scholars are using ChatGPT/GPT-4 regularly for their work.” However, our trend is more in line with a study of more than 6,000 German university students, in which 49% of the sample reported using ChatGPT/GPT-4.11

At both T1 and T2, the top reported use cases for AI tools were grammar corrections/proofreading, rewriting, translation, and drafting abstracts/lay summaries. These dominant use cases are consistent with those reported in other surveys and studies. For example, the De Gruyter survey reported that “the most popular AI tools among scholars are focused on language support,”9 and the German study reported “text creation” and translation as top use cases across several research fields.11

Surprisingly, our results show that the use of AI for editing, proofreading, and rewriting tasks declined between T1 and T2, despite the overall increase in the use of AI. Perhaps authors are finding, similar to our own experience, that while AI can produce near-perfect text in terms of grammar and spelling, it can actually increase the overall workload because of the additional need for fact checking and verification.

Overall, AI tools appear to be supporting a niche in academia currently served by professionals such as editors and translators, or those with excellent language skills in a research or peer group. These findings lend further credence to the claim, or hope, that AI tools could bring more equity to academia by partially reducing the cost and time of overcoming language barriers. “Very positive. They are equalizers,” quipped one respondent when asked about their AI outlook.

Attitudes Among AI Non-users

Questions specific to the non-use of AI tools were shown only to respondents who did not report using AI tools for editing or writing.

Among this non-user segment, rather surprisingly, the percentage of respondents who planned to use AI-based tools for writing or editing in the future dropped from 53% to 40% between May and November 2023. Given the statistics indicating increased AI adoption overall, this perhaps indicates that those who initially adopted a wait-and-see approach had started using AI by the latter half of 2023 (“Not now – but not ‘never’”9), while those with a stronger objection to AI use appear to remain unswayed.

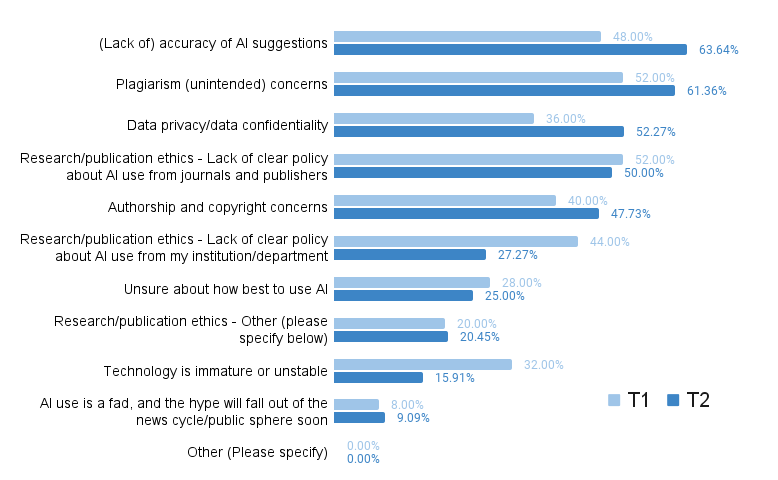

While both survey rounds indicated (lack of) trust and (potential) ethical issues as the top broad reasons for AI non-use, again consistent with the De Gruyter survey,9,10 the specific reasons have evolved between the 2 rounds (see Figure 3). At T1, about 50% of AI non-users were concerned about unintended plagiarism arising from the use of generated content (52%), the lack of clear policies about AI use from journals and publishers (52%), and the inaccuracy of AI suggestions (48%).

Concerns about inaccuracy (48% → 64%), plagiarism (52% → 61%), and lack of clarity from journals/publishers (52% → 50%) carried over into November, although the slight decrease in the last of these suggests that journals and publishers may have started to provide clearer guidelines. Similarly, the guidelines and press releases issued by Hong Kong universities between May and November4,5,12-14 seem to have allayed a major concern raised by non-users at T1: “Research/publication ethics – Lack of clear policy about AI use from my institution/department” (44% → 27%).

In the meantime, authors seem to have become much more aware of the potential privacy and data confidentiality risks (36% → 52%), perhaps due to the flurry of OpenAI data breach news reports released between the 2 rounds.15-17
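The shifts in non-users’ concerns reported above are easier to compare side by side. The following is a minimal Python sketch that tabulates the percentage-point change for each concern between T1 and T2, using only the percentages quoted in the text. The short labels are shorthand for the survey options rather than their exact wording, and the helper name `point_changes` is illustrative only.

```python
# Percentage of AI non-users citing each concern, as quoted in the text.
# Tuple order: (T1 = May 2023, T2 = November 2023).
# Labels are shorthand, not the exact wording of the survey options.
concerns = {
    "Inaccuracy of AI suggestions": (48, 64),
    "Unintended plagiarism": (52, 61),
    "Unclear journal/publisher policy": (52, 50),
    "Unclear institutional policy": (44, 27),
    "Privacy and data confidentiality": (36, 52),
}


def point_changes(data):
    """Return (label, T2 - T1) pairs, largest increase first."""
    changes = [(label, t2 - t1) for label, (t1, t2) in data.items()]
    return sorted(changes, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for label, change in point_changes(concerns):
        print(f"{label}: {change:+d} percentage points")
```

Because the samples were not matched between the 2 rounds, these differences describe changes between two groups of respondents, not changes of opinion within the same individuals.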

Disclosure Beliefs

Both AI users and non-users were asked whether the use of AI for writing or editing needs to be mandatorily disclosed. In both rounds, nearly two-thirds of respondents urged disclosure for writing, while only half considered disclosures necessary for editing. Clearly, authors see writing and editing as distinct contributions to the publishing process, probably because writing is more closely associated with “original ideas” than editing.

In contrast, journals, publishers, and professional associations that have produced guidelines on AI use and disclosure largely do not make this writing vs. editing distinction. Several guidelines use all-encompassing terms, such as “using LLM tools” (Nature18), “usage of AI tools” (Council of Science Editors19), and “production of submitted work” (International Committee of Medical Journal Editors20), while others explicitly refer only to writing. NEJM AI21 is a positive outlier in that it analogizes AI use disclosure to disclosure of third-party copyediting services. It is possible that journals and publishers view copyediting, especially if done by the authors themselves using AI tools, as part of the writing process, whereas (multilingual) authors do not.

Some open-ended responses further indicate that authors see the use of AI as akin to that of other software and tools used in research and publishing:

- “AI tools are just tools, similar to word processors and Google Scholar that authors are using extensively.”

- “It is here to stay. We need to embrace and find ways for it to be universally accepted in both the teaching and research fields. It is a very powerful tool but only that!”

What the Future Holds…

At T2, a larger proportion of respondents (11% increase) endorsed the statement that AI writing tools will become “an essential tool that all writers will use,” reflecting the overall theme of increased adoption of and positive outlook toward AI tools. Moreover, when asked how favorably they viewed a “hybrid” editing service, in which a human editor would review the output of an AI editing tool, resulting in lower editing fees and no compromise on quality or data security, 50% of respondents responded favorably in both surveys, with about 34% as yet undecided.

Our opinion is that these results are driven primarily by potential cost savings for authors. “Human editing is financially unviable for adjunct faculty, so an AI-Human hybrid may help,” commented a respondent at T2. Our internal business data also suggest that 50% of clients opt for the least expensive editing option (i.e., the slowest return option).

Given everything discussed thus far, we expect the use of AI writing and editing tools to become increasingly normalized over the coming years, with the effectiveness and sophistication of the underlying technologies, legal clarity (e.g., on copyright), policy clarity (e.g., from publishers, journals, and universities), and authors’ willingness to use these tools all increasing.

Therefore, we present writing- and editing-related recommendations for authors, publishers/journals, and editors—based on our survey data and a year’s worth of our own experimenting with AI tools—to help all involved prepare for what appears to be an inevitable AI-integrated future.

…For Authors

At present, we would not advise the use of AI tools for either writing or editing without a thorough review by a human before manuscript submission. We elaborate on this below, in our advice for editors, but it is important to emphasize to all users, and especially those with non-fluent levels of English, that AI can sometimes produce serious errors that can be “hidden” within AI’s superficially accurate output.22

…For Publishers and Journals

While the current lack of clarity in the guidelines can affect authors of any linguistic background, including those for whom English is a primary language,23 it disproportionately affects multilingual authors because journals more often ask that their manuscripts be copyedited for clarity, grammar, and style compliance before (re)submission.6

We recommend that guidelines on AI use disclosure explicitly refer to writing and editing as separate tasks. Another solution, proposed in a recent article24 by Avi Staiman, a leading voice on AI use in academia, is to clarify which routine AI-assisted tasks require disclosure and which do not. We are agnostic on whether AI-assisted copyediting needs to be disclosed, because currently not all journals or publishers require the disclosure of third-party human copyediting services, and not all authors choose to disclose their use of such services.

…For Research Copy Editors

We recommend that copy editors consider developing and offering a post-editing service to authors. In the translation industry, to post-edit is to “edit and correct machine translation output … to obtain a product comparable to a product obtained by human translation.”25 Post-editing has been reported to increase translator productivity, with the extent varying depending on factors such as linguist skill, machine translation output quality, familiarity with the tool, and expected final output quality.26 As AI tools improve further, copy editors may be able to adopt a similar model, passing on the efficiency gains to the clients by offering lower editing costs but offsetting income losses by appealing to a larger audience, who may have previously found 100% human services unaffordable. However, we strongly believe that author consent must lie at the heart of such services: editors must never use AI tools on author manuscripts without author consent, as also argued by Blackwell and Swenson-Wright.27

We do not anticipate the availability of AI tools to eliminate the need for human copy editors, as both language skills and domain expertise remain essential to evaluate the grammatical and technical accuracy of the output. This view is also reflected in what our respondents ranked as the most useful features of an AI editing tool in both survey rounds: “seeing revisions as tracked changes,” “explanations for changes made,” and “ability to submit to review by a human.” “Sometimes the outputs of AI tools are questionable. It is troublesome to verify the validity of the contents,” said one respondent, with another adding, “Human editing must still be the major and the last step to safeguard the quality and ethics of writing/editing.”

We do caution those in the editing profession to look out for the U-shaped employment polarization that seems to be occurring in the translation industry: an increase in low-paid and high-paid roles but a decrease in mid-paid roles.28

Disclosures and Acknowledgements

No AI tools were used in the drafting or editing of this manuscript. The authors thank AsiaEdit CEO Peter Day and COO Nick Case for suggesting and supporting the surveys on which this report is based.

References and Links

- Thorp HH. ChatGPT is fun, but not an author. Science. 2023;379:313. https://doi.org/10.1126/science.adg7879.

- Holliday I. About ChatGPT. [accessed April 1, 2024]. University of Hong Kong Teaching and Learning, 2023. https://tl.hku.hk/2023/02/about-chatgpt/.

- Thorp H, Vinson V. Change to policy on the use of generative AI and large language models. [accessed April 1, 2024]. Editor’s Blog, Science. 2023. https://www.science.org/content/blog-post/change-policy-use-generative-ai-and-large-language-models.

- University of Hong Kong. HKU introduces new policy to fully integrate GenAI in Teaching and Learning. August 3, 2023. [accessed April 1, 2024]. https://www.hku.hk/press/press-releases/detail/26434.html.

- Yee CM. At The University of Hong Kong, a full embrace of generative AI shakes up academia. [accessed April 1, 2024]. Source Asia, Microsoft. 2023. https://news.microsoft.com/source/asia/features/at-the-university-of-hong-kong-a-full-embrace-of-generative-ai-shakes-up-academia/.

- Amano T, Ramírez-Castañeda V, Berdejo-Espinola V, Borokini I, Chowdhury S, Golivets M, González-Trujillo JD, Montaño-Centellas F, Paudel K, White RL, et al. The manifold costs of being a non-native English speaker in science. PLOS Biol. 2023;21:e3002184. https://doi.org/10.1371/journal.pbio.3002184.

- Chang A. Lost in translation: the underrecognized challenges of non-native postdocs in the English scientific wonderland. [accessed April 1, 2024]. The Academy Blog. The New York Academy of Sciences. https://www.nyas.org/ideas-insights/blog/lost-in-translation-the-underrecognized-challenges-of-non-native-postdocs-in-the-english-scientific-wonderland/.

- Giglio AD, Costa MUPD. The use of artificial intelligence to improve the scientific writing of non-native English speakers. Rev Assoc Med Bras (1992). 2023;69:e20230560. https://doi.org/10.1590/1806-9282.20230560.

- Abbas R, Hinz A, Smith C. ChatGPT in academia: how scholars integrate artificial intelligence into their daily work. [accessed April 1, 2024]. De Gruyter Conversations, 2023. https://blog.degruyter.com/chatgpt-in-academia-how-scholars-integrate-artificial-intelligence-into-their-daily-work/.

- Abbas R, Hinz A. Cautious but curious: AI adoption trends among scholars. [accessed April 1, 2024]. DeGruyter Report. 2023. https://blog.degruyter.com/wp-content/uploads/2023/10/De-Gruyter-Insights-Report-AI-Adoption-Trends-Among-Scholars.pdf.

- von Garrel J, Mayer J. Artificial intelligence in studies—use of ChatGPT and AI-based tools among students in Germany. Humanit Soc Sci Commun. 2023;10:799. https://doi.org/10.1057/s41599-023-02304-7.

- Information Technology Services Center. ChatGPT service. [accessed April 1, 2024]. Lingnan University. https://www.ln.edu.hk/itsc/services/learning-and-teaching-services/chatgpt.

- Chen L. University of Hong Kong allows artificial intelligence program ChatGPT for students, but strict monthly limit on questions imposed. [accessed April 1, 2024]. South China Morning Post. 2023. https://www.scmp.com/news/hong-kong/hong-kong-economy/article/3229874/university-hong-kong-allows-artificial-intelligence-program-chatgpt-students-strict-monthly-limit.

- Cheung W, Wong N. Hong Kong universities relent to rise of ChatGPT, AI tools for teaching and assignments, but keep eye out for plagiarism. The Star. 2023. https://www.thestar.com.my/tech/tech-news/2023/09/04/hong-kong-universities-relent-to-rise-of-chatgpt-ai-tools-for-teaching-and-assignments-but-keep-eye-out-for-plagiarism.

- Southern MG. Massive leak of ChatGPT credentials: over 100,000 accounts affected. [accessed April 1, 2024]. Search Engine Journal. 2023. https://www.searchenginejournal.com/massive-leak-of-chatgpt-credentials-over-100000-accounts-affected/489801/.

- Lomas N. ChatGPT-maker OpenAI accused of string of data protection breaches in GDPR complaint filed by privacy researcher. [accessed April 1, 2024]. TechCrunch. 2023. https://techcrunch.com/2023/08/30/chatgpt-maker-openai-accused-of-string-of-data-protection-breaches-in-gdpr-complaint-filed-by-privacy-researcher/.

- Summers-Miller B. Sharing information with ChatGPT creates privacy risks. [accessed April 1, 2024]. G2. 2023. https://research.g2.com/insights/chatgpt-privacy-risks.

- Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature. 2023;613:612. https://doi.org/10.1038/d41586-023-00191-1.

- Jackson J, Landis G, Baskin PK, Hadsell KA, English M. CSE guidance on machine learning and artificial intelligence tools. Sci Ed. 2023;46:72. https://doi.org/10.36591/SE-D-4602-07.

- International Committee of Medical Journal Editors (ICMJE). Recommendations for the conduct, reporting, editing, and publication of scholarly work in medical journals. [accessed April 1, 2024]. ICMJE, 2024. https://www.icmje.org/icmje-recommendations.pdf.

- Koller D, Beam A, Manrai A, Ashley E, Liu X, Gichoya J, Holmes C, Zou J, Dagan N, Wong TY, et al. Why we support and encourage the use of large language models in NEJM AI submissions. NEJM AI. 2023;1. https://doi.org/10.1056/AIe2300128.

- Kacena MA, Plotkin LI, Fehrenbacher JC. The use of artificial intelligence in writing scientific review articles. Curr Osteoporos Rep. 2024;22:115–121. https://doi.org/10.1007/s11914-023-00852-0.

- Heard S. Aren’t AI declarations for journals kind of silly? [accessed April 1, 2024]. Scientist Sees Squirrel. 2024. https://scientistseessquirrel.wordpress.com/2024/01/09/arent-ai-declarations-for-journals-kind-of-silly/.

- Staiman A. Dark matter: What’s missing from publishers’ policies on AI generative writing? [accessed April 1, 2024]. TL;DR, Digital Science, 2024. https://www.digital-science.com/tldr/article/dark-matter-whats-missing-from-publishers-policies-on-ai-generative-writing/.

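- International Organization for Standardization. ISO 18587:2017. Translation services: post-editing of machine translation output: requirements. https://www.iso.org/obp/ui/en/#iso:std:iso:18587:ed-1:v1:en:term:3.1.4.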

- How fast can you post-edit machine translation? [accessed April 1, 2024]. Slator, 2022. https://slator.com/how-fast-can-you-post-edit-machine-translation/.

- Blackwell A, Swenson-Wright Z. Editing companies are stealing unpublished research to train their AI. [accessed April 1, 2024]. Times Higher Education, 2024. https://www.timeshighereducation.com/blog/editing-companies-are-stealing-unpublished-research-train-their-ai.

- Giustini D. The language industry, automation, and the price of finding the right words. [accessed April 1, 2024]. Futures of Work, 2022. https://futuresofwork.co.uk/2022/02/10/the-language-industry-automation-and-the-price-of-finding-the-right-words/.

Felix Sebastian (https://orcid.org/0009-0008-2453-4097; https://www.linkedin.com/in/felixsebastian/) is the Managing Director at AsiaEdit (https://asiaedit.com/). Dr Rachel Baron (https://orcid.org/0009-0000-6502-9734; https://www.linkedin.com/in/rachel-baron-75bb1817/) is the Managing Editor for Social Sciences and the Head of Training & Development at AsiaEdit.

Opinions expressed are those of the authors and do not necessarily reflect the opinions or policies of the Council of Science Editors or the Editorial Board of Science Editor.