MODERATOR:

Teodoro Pulvirenti

Director of Publishing Integrity and Partner Services

American Chemical Society

SPEAKERS:

Sowmya Swaminathan

Head of Editorial Policy and Research Integrity

Nature Portfolio

Springer Nature

Bernd Pulverer

Head of Scientific Publications

EMBO

Catriona Fennell

Director of Publishing Services

Elsevier

Jana Christopher

Image Integrity Analyst

FEBS Press

REPORTER:

Tony Alves

Highwire Press

The session, “Recommendations for Handling Image Integrity Issues,” was moderated by Teodoro Pulvirenti, Director of Publishing Integrity and Partner Services at the American Chemical Society, and started with a panel discussion on the work of the STM Standards and Technology Committee (STEC) Working Group on Image Alterations and Duplications.1 The panel included Sowmya Swaminathan, Head of Editorial Policy and Research Integrity at Springer Nature; Bernd Pulverer, Head of Scientific Publications at EMBO; and Catriona Fennell, Director of Publishing Services at Elsevier. The panel discussion was followed by a presentation on how to identify image integrity issues by Jana Christopher, Image Integrity Analyst at FEBS Press.

The panel discussion started with Fennell reviewing the history and objectives of the Working Group on Image Alterations and Duplications, an initiative launched by the STEC at STM. The working group was established to answer shared questions that many publishers had around image issues, and to identify tools that could be used to detect image manipulation before publication.

The working group created a list of recommendations for editors and journal staff handling image integrity issues, based on the group's collective experience. The hope is that these guidelines will be complementary to other guidelines, such as those by the Committee on Publication Ethics (COPE)2 and the Cooperation & Liaison between Universities & Editors.3 The lack of a consistent, structured approach decreases editors’ confidence in how to deal with image issues, and the lack of a standard classification prevents the creation of good tools. It is hoped that the recommendations will address both problems.

A draft of the recommendations4 was posted on the OSF preprint server in September 2021. The community submitted comments, which were reviewed and incorporated into the final draft. That revised version5 was published in December 2021, and further revisions are expected in the future.

Swaminathan addressed the structure of the recommendations and the principles that were defined by the working group. She stated that research is at the heart of everything we do, and researchers are responsible for the quality of the data and for the research. The first set of principles is focused on the researcher and is intended to be broadly framed: images should reflect the condition of the original data collection and should not be enhanced or edited. A core principle is transparency in how images were generated and what, if any, processing was used on the images. Any transformations should be described so that editors, reviewers, and readers can understand what was done to the image and why it was done. Journals may want to incorporate these principles in their guidelines.

A second part of the core principles is about editors and what they bring to the process. Pulverer discussed how journal editors should handle image manipulation issues. Editors must support the reliability of the scholarly literature, but it is not up to the editor to sanction the author. Due diligence is important, but editors cannot be expected to detect all instances of image abuse. It is important to understand that not all image issues are meant to deceive; sometimes, the manipulation is an enhancement or a mistake. The recommendations also state that source data can be requested to help determine whether manipulation was intentional.

Sometimes readers question image integrity postpublication. Fennell pointed out that these concerns need to be addressed, because doing so is an important part of correcting the literature. Some editors are uncomfortable when the report is anonymous, but editors are asked to look at the merit of the comments, not their source. COPE has good flowcharts, and the COPE guidelines are the basis for the STM recommendations. The editor should assess the evidence, and it is up to the editor to decide if the author should address any questions. The interchange between editor and author could become part of the public record, including a letter to the editor and retraction notices. The editor should respect the reporter's confidentiality and maintain their anonymity.

It is important to remember that the aim of the recommendation is not to police the author, but rather to work with the author and to provide a service to the community.

The working group also created a classification of image alterations. Swaminathan described the classification as a structured decision-making framework: a device to help editors make consistent decisions about the impact that a problem image has on the integrity of a paper and to determine the kind of editorial action that should be taken. The recommendations define three levels of increasing severity, each with a description and a recommended editorial action. A constellation of issues will affect action and outcome. For example, a level 1 issue (least severe) may be so pervasive that it affects confidence in the integrity of the findings, and so it will cause the editor to take a more severe action. The levels were based on three considerations: 1) type and severity of the anomaly; 2) whether it is the result of an error or an intentional manipulation; and 3) impact of the problem image on the main conclusions of the study.

Pulverer reminded the audience that the STM recommendation leans heavily on the COPE guidelines. Those guidelines provide a sequence for investigation and describe the nature of what actions should be taken. These include recommendations on interactions with authors and institutions. Actions might be very different depending on whether the problem is found prepublication or postpublication. There is more flexibility when dealing with issues during prepublication. In most cases, an author’s institution should be notified because they can be more effective and have a stronger position with the author. Editors are focused on the integrity of the scientific record and should not be perceived as out to get the author.

Swaminathan added the nuance that intent is not the final arbiter of the outcome or of the actions taken; the important consideration is the impact of the error on the research. Does the article’s claim stand?

Pulverer made the point that the working group has not fully outlined corrective measures, and that currently there are only two choices: correcting a paper or retracting it entirely. A more nuanced set of tools is needed to help correct the scientific record without the drastic measure of retraction.

Finally, the challenge of creating generalized recommendations was discussed by Fennell. She noted that different publishers have different policies and take different measures to correct the record. But because the working group was a good representative sample of publishers, finding common ground wasn’t too hard. A bigger challenge is that disciplines are very different, and it is hard to find solutions that work across disciplines. The recommendations are not exclusive to the life sciences, but that is where most of the data are available, and image analysis tools are trained on life science data. A lot of work needs to be done in other subject areas. The recommendations may need to be more general in the future.

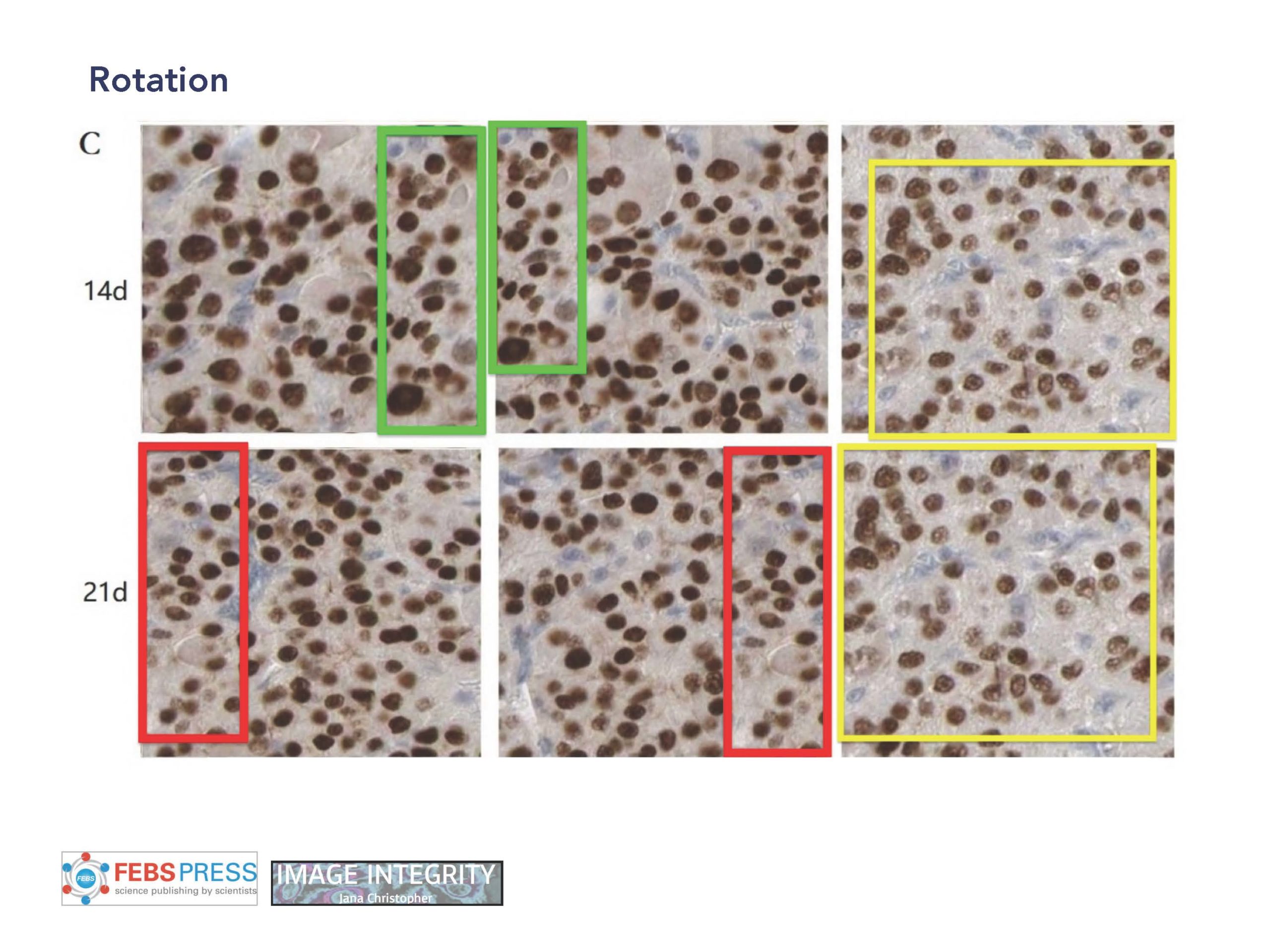

Jana Christopher, Image Integrity Analyst at FEBS Press, wrapped up the session with a deep dive into how to identify image integrity issues. There is a clear distinction between individual misconduct and systematic fabrication of research data (paper mills). Christopher works mostly in biochemistry, so most of the techniques are focused on western blots and gels, microscopy images, photos of animals and plants, plots, and graphs. She uses Photoshop to enhance images to find manipulation. She changes contrast, uses color tones, rotates panels, and looks at edges of images because that is often where overlaps will happen. Cloning of an image is hard to detect because there is no obvious manipulation. She says to always look for odd cuts in the image, as this means the author has assembled the image via copy and paste (Figure 1). You often need the raw data to detect these problems. There are automated screening tools that are good at picking up duplication, but they are not as good at detecting manipulation. The tools are improving, but people are still better at going deep into the forensic investigation.
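The kind of duplication screening that automated tools perform can be illustrated with a minimal sketch. This is not one of the tools discussed in the session; it assumes a grayscale image represented as a list of rows of pixel values, and the function name `find_duplicate_tiles` and the tile size are hypothetical choices for illustration. It finds only exact copies of a pixel block, whereas real screening tools also have to cope with rotation, rescaling, and compression noise.

```python
from collections import defaultdict


def find_duplicate_tiles(image, tile=4):
    """Group top-left (row, col) positions whose tile x tile blocks are identical.

    `image` is a list of equal-length rows of pixel values (an assumption
    made for this sketch, not a format used by any particular tool).
    """
    rows, cols = len(image), len(image[0])
    seen = defaultdict(list)
    # Slide a tile x tile window over every position in the image.
    for r in range(rows - tile + 1):
        for c in range(cols - tile + 1):
            block = tuple(tuple(image[r + dr][c:c + tile]) for dr in range(tile))
            # Skip uniform blocks (flat background), which repeat trivially.
            if len({p for row in block for p in row}) == 1:
                continue
            seen[block].append((r, c))
    # Keep only blocks that occur at more than one position.
    return [positions for positions in seen.values() if len(positions) > 1]
```

A block reported at two positions is only a prompt for closer manual inspection, in line with the point above that people remain better at the deeper forensic investigation.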

Christopher then turned to a discussion of paper mills, organizations that fabricate studies that are then sold to researchers who are trying to publish. The data are often falsified, it is not clear if the experiments have actually been performed, and the same images may have been used multiple times to represent different experiments.

Christopher explained that paper mills streamline figure manipulation to make it cost effective, reusing images and labeling them differently in different papers. They use modified versions of images, and they create and invent images, which can be hard to detect. They reuse data sets and generate bar graphs from the data, using the same graphs in multiple papers. A lot of journals now ask for raw data, and they should clearly specify what is acceptable as raw data. Raw data should be uncropped, unprocessed images.

Christopher concluded with some recommendations for combating organized fraud:

- Increased vigilance and prepublication integrity checks

- Request raw data—be aware even raw data might be falsified

- Educate peer reviewers

- Faster retraction of fraudulent papers

- Share knowledge and tell-tale signs of paper mills

- Stronger incentives for responsible research practices like data sharing

- Collaborations between publishers

References and Links

1. https://www.stm-assoc.org/standards-technology/working-group-on-image-alterations-and-duplications

2. https://publicationethics.org/resources/flowcharts/image-manipulation-published-article

3. https://researchintegrityjournal.biomedcentral.com/track/pdf/10.1186/s41073-021-00109-3.pdf

4. https://osf.io/xp58v/

5. https://osf.io/8j3az/?pid=xp58v