MODERATOR:

Chirag “Jay” Patel

Cactus Communications

Gustavo Monnerat

The Lancet

Chhavi Chauhan

Samast AI, American Society for Investigative Pathology, and Women in AI

Annette Flanagin

JAMA and JAMA Network

Heather Goodell

American Heart Association

REPORTERS:

Andrea Rahkola

American Academy of Neurology

Tim Gray

JAMA Network

It could be argued that artificial intelligence (AI) and policy were the top two categories of conversation at the 2025 CSE Annual Meeting in Minneapolis, Minnesota; this session combined these categories for a look at the status quo of AI in science publishing. What once seemed a distant science publishing tool is now projected to soon be part of standard processing. Now is when the science publishing industry must work together to use AI to its full potential while implementing safeguards for research and peer review integrity.

Moderator Chirag “Jay” Patel introduced this session. Speakers Gustavo Monnerat, Chhavi Chauhan, Annette Flanagin, and Heather Goodell (Figure 1) covered AI application, moving through AI policies for authors, for peer review, and for meeting abstracts, and then theorizing on the future of AI in science publishing.

AI Policies for Authors

Gustavo Monnerat highlighted five key points from The Lancet’s guidelines for authors.1 First, AI should be used to improve readability, not to replace conclusions or data analyses, and its use must be overseen by a human. Second, to ensure reproducibility of the results, transparency should include acknowledgment of AI use, the model, the version, the prompt used, and the specific sections where AI was applied. Third, AI use carries restrictions: AI should never process any unpublished research to create interpretive comments. Fourth, AI poses opportunities to improve inclusivity and protect research integrity. Fifth, AI policies and guidelines are evolving; The Lancet plans to update its guidance as a living document.

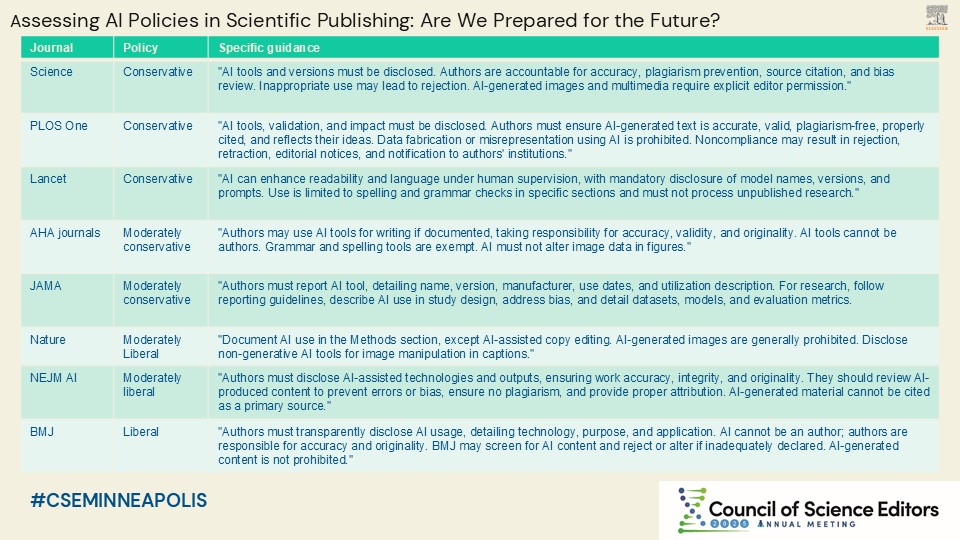

Monnerat discussed several examples of journals’ AI policies, ranging from conservative (highly detailed) to liberal (less detailed) (Figure 2). He identified common elements among these policies: authors must disclose their use of AI, are responsible for accuracy, must understand the copyright risks that come with AI use, and must be aware of journal policies on the use of AI. In closing, Monnerat emphasized the intention to foster transparency.

AI Policies During Peer Review

Annette Flanagin referenced JAMA Network guidance on AI use during peer review.2 JAMA Network has been “playing a lot of catch-up” and has released multiple guidance reports on AI. Its guidance extends the use of AI tools to peer review with an explicit reminder of the confidentiality of submitted papers and the peer review process. Flanagin noted, “our confidentiality policy prohibits the entering of any part of the manuscript or your review into a chatbot, language model, or similar tool.” JAMA Network reminds reviewers of this policy at invitation and asks at review submission whether AI was used, with precise instructions on what must be reported about AI use. From July 2023 through March 2025, 0.7% of JAMA Network reviewers reported the use of AI when preparing their reviews. The most common uses of AI described were for language, grammar, and checking methodology; Flanagin pointed out that the last of these raises the question of whether reviewers entered something they should not have.

Flanagin summarized a range of peer review policies regarding use of AI by leading scientific journals and publishers, from conservative (no use) to liberal (not permitting use in nonpublic models that cannot guarantee confidentiality) (Figure 3).

AI Policies for Meeting Abstracts

Heather Goodell acknowledged what many scientific publishing professionals have experienced: “we’ve been burned by our meeting abstracts before.” For many journals, abstracts are published as a service to the conference and the field. Last year, during review of 8,500 submitted abstracts, the American Heart Association (AHA) used the Cactus Communications tool Paperpal Preflight for Editorial Desk for integrity checks. While only a few abstracts were flagged with a warning, there were additional issues with authors on several abstracts, as many as 30 or 40; most of these abstracts were systematic reviews or meta-analyses. AHA emailed every flagged abstract’s corresponding author and asked for authorship to be verified.

The AHA now has AI policies for meeting abstracts. They adopted what has been applied to the journals for research writing (i.e., spellcheck is okay, but you must disclose it), added a disclaimer to the abstracts, and implemented the same policy for reviewers (i.e., do not upload confidential content to a large language model). Goodell emphasized, “we do not want to penalize early career researchers, but we are responsible for the research being published in the journals.”

The Future: For Authors, Meetings, Peer Reviewers, and Scientific Publishing

Chhavi Chauhan reminded attendees, “no one has a crystal ball,” as she imagined the future of AI policies for authors, for meetings, and for peer review in scientific publishing. Chauhan asserted the need for living guidelines and for transparency with detailed reporting before discussing the potential of The AI Scientist and the generation of AI data and images. The AI Scientist generates hypotheses, performs experiments, and produces results; it can create full research articles and has produced a peer review system.3 Chauhan noted that The AI Scientist could be used to create great volumes of submissions and, given its low cost, may have utility when funding is scant. The generation of AI data and images may be used to fraudulently enrich data sets but can also be used in positive and progressive ways, such as accessibility initiatives. Use of AI tools for data analysis raises concerns, especially when there is no human check, that systematic reviews may become meaningless. Could scientific publishing lean into publishing and monetizing “dataset oceans” rather than research articles? There will be the question of data ownership. Creators now want to own their content and be rewarded. Chauhan asked, “will we think about giving rewards to authors or researchers? How would that change policies?”

For meetings and peer review, Chauhan posited a rise in AI-assisted submissions, increased reviewer burden, and a need to rely on tools to check for AI use. Submissions that look similar may become more common, and ownership and attribution will need to be carefully considered. It is time for scientific publishing to ethically integrate AI tools, not only to defend integrity but also to assist with the most strenuous aspects of scientific review. Human review will always be necessary, but with the struggle to find statistical editors, AI could be used for a first pass at statistical review. AI may also be able to check citations to determine appropriate attribution, reducing the burden of long reference lists. Ultimately, Chauhan sees AI as an opportunity for the scientific publishing community to come together, agree on a baseline of AI policies, share use cases of AI, and think critically about the policies that should be instituted.

Session Q&A

Six questions were raised. To the first question of whether early-career researchers using The AI Scientist to construct and submit a paper based on nonsense could be detected, panelist Annette Flanagin responded, “I’m not convinced we wouldn’t know.” The human touch on articles, discussion of submissions among editors, and the expertise of peer reviewers have continued importance. Experts know context better than internet-scraping AI. To the second question of whether the speakers expected any changes in lenient policies for peer reviewers in the case of articles that were already published as preprints, the speakers recognized the value of preprints, as demonstrated during the COVID-19 pandemic, and expressed hope that the next generation of peer review systems will have AI built in to assist reviewers and editors. To the third question asking the point of peer review if authors can use AI to complete the same peer review themselves, the speakers emphasized that good peer review evaluates novelty and uniqueness. To the fourth question on how implementing AI in peer review could be a prompt to evaluate what peer review is, the speakers reemphasized the importance of human touch in that a human will be needed to review AI reviews. To the fifth question about how policy around research based on statistical models will be affected by AI, the speakers acknowledged the need for the full dataset for an AI review to be effective, that a human will need to check the results of an AI review, and that there is great potential in AI being used to compare a manuscript with the study protocol and preregistration. To the final question of the value, the speakers reiterated that questions of whether something is truly important will always require a human editor to answer.

References and Links

1. Bagenal J, Biamis C, Boillot M, Brierly R, Chew M, Dehnel T, Frankish H, Grainger E, Pope J, Prowse J, et al. Generative AI: ensuring transparency and emphasising human intelligence and accountability. Lancet. 2024;404:2142–2143. https://doi.org/10.1016/S0140-6736(24)02615-1

2. Flanagin A, Kendall-Taylor J, Bibbins-Domingo K. Guidance for authors, peer reviewers, and editors on use of AI, language models, and chatbots. JAMA. 2023;330:702–703. https://doi.org/10.1001/jama.2023.12500

3. Sakana AI. The AI Scientist: towards fully automated open-ended scientific discovery. [accessed August 14, 2025]. https://sakana.ai/ai-scientist/