This follow-up session to 2024’s Researcher to Reader (R2R) workshop continued from where the previous year’s initiative ended: charting a course for the accelerated evolution of the peer review process in scholarly publishing. Peer Review Innovations: What are Strategies for Implementing Solutions Across Scholarly Communications? brought together a group of invested individuals from across the industry, including publishers, funders, technologists, librarians, and research integrity experts. The aim this time around was to move beyond identifying challenges and focus instead on actionable solutions that the attendees themselves could initiate. With peer review under increasing strain due to rising submission volumes, reviewer shortages, and concerns about integrity, the discussion centered on practical strategies to improve efficiency, fairness, and transparency.

Lessons from the 2024 Workshop

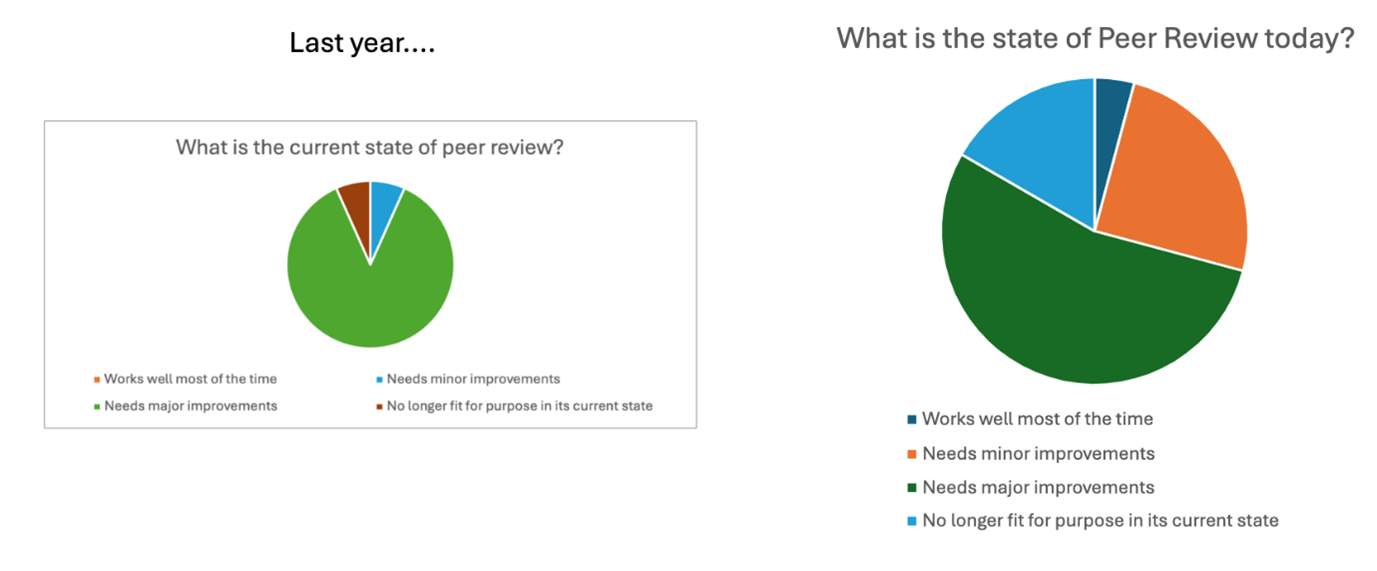

The lessons learned from the previous workshop helped to shape the framework of the 2025 discussions. Twelve months earlier, we had explored the root causes of peer review’s most pressing problems. First, we established that there was indeed a looming crisis in peer review: 93% of attendees voted that peer review needed major improvements (revisions?) and that current workflows were both under strain and incentivizing malpractice. Some attendees were worried about artificial intelligence (AI)-generated fake research; others were concerned about identity fraud among reviewers, a lack of training for peer review, and overburdened editorial teams.

However, there was also consensus that peer review remains an essential pillar in upholding the integrity of scientific research. Open peer review and AI-assisted reviewer selection were seen as promising, but widespread adoption and standardization were considered necessary to maximize their impact.1

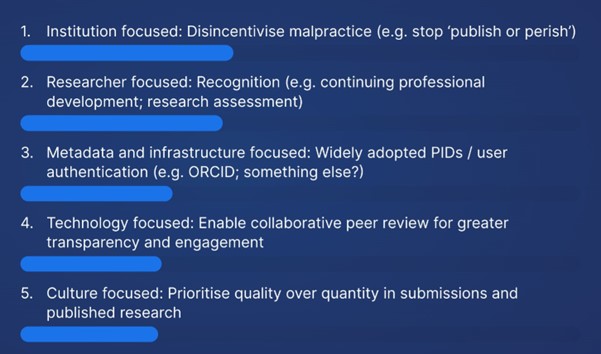

The attendees identified the following challenges and themes as the focus for innovation:

- Disincentivizing malpractice. How can we prevent unethical behavior in peer review?

- Professional recognition for peer reviewers. What systems can ensure reviewers receive appropriate credit for their work?

- Adoption of persistent identifiers (PIDs). How can we enhance identity verification in peer review?

- Technology in peer review. What role should AI and automation play in improving efficiency?

- Quality vs quantity. How can we balance the increasing volume of submissions with maintaining rigorous review standards?

The 2025 workshop set out to build on these insights and to move from discussing problems to developing concrete implementation strategies to solve them.

2025 Workshop Session 1: Identifying Challenges and Opportunities

The first session opened with a broad discussion on whether the challenges identified in 2024 (Figure 1) were still relevant and whether new problems had emerged. Attendees broadly agreed that the challenges were still relevant and that any new problems fell within the existing categories.

We repeated the poll about the current state of peer review, with the results in Figure 2.

Alongside the number of attendees who voted that peer review needed major improvements, a notable shift was that the percentage who voted that it is no longer fit for purpose increased.

Attendees then broke into small groups to discuss each challenge, its root causes, and the barriers it creates, before moving on to a more positive discussion to identify successes and existing strategies in each area that could be expanded. Groups rotated during the discussion to bring as many perspectives as possible to each challenge. Several themes emerged:

- Reviewer identity fraud remains a real, persistent problem. Some participants pointed to fake reviewer accounts created to manipulate journal decisions, while others noted that a lack of multifactor authentication in submission systems makes it easy for fraudulent reviews to slip through and game the system.

- AI’s role in peer review sparked debate. Some participants saw AI tools as essential for streamlining administrative tasks, while others worried about biases in AI-generated reviewer recommendations and the ethical implications of AI-assisted manuscript screening.

- The sheer volume of submissions continues to strain the system. Participants questioned whether all research should go through traditional peer review, or if alternative models, such as community-driven postpublication review, could help alleviate pressure on journals.

- Professional recognition for peer review is not administered consistently and is not always effectively integrated into systems that matter to peer reviewers, such as funding and career development. Differences in recognition schemes, training, and regional approaches, combined with a lack of accountability for reviews, lead to inconsistent review quality and greater subjectivity.

2025 Workshop Session 2: Designing Innovative Solutions

After a short recap of Session 1, participants were tasked with thinking of bold, practical strategies to tackle the identified challenges. Each table worked on a different area of peer review innovation, focusing on immediate actions, medium-term strategies, and “blue-sky” ideas.

Key Solutions

Creating a professional governing body for peer reviewers, which would establish industry-wide ethics guidelines, offer certification programs, and provide accreditation for high-quality reviewers.

Implementing multifactor authentication for reviewers, building on and enhancing existing PIDs such as ORCID to reduce fraudulent reviews and improve identity verification. It is all but impossible to open a bank account (or crypto account) without some kind of background check involving utility bills, passports, and other proof of identity. In the financial sector, this is known as Know Your Customer (KYC). It minimizes the risk of fraud and identity theft, and it seems to be something most of the world has learned to live with, if perhaps not love. Why not apply the same protocols to prevent fraud and identity theft (or spoofing) in the academic world? Is the value of the scientific corpus worth protecting in this way?

Although an STM Task and Finish Group is already examining this issue,2 the sense in our room was that such verification is essential. Why are we leaving the most important quality control checks to volunteers who are, for the most part, strangers to us, often known only by an email address? Most people felt that ORCID would be the ideal vehicle for this, but although ORCID is developing protocols to identify individuals through community efforts, it is not an identity verification system. How this will play out remains an open question.

Building a peer review registry, where reviews travel with papers across journals, reducing redundant reviews and streamlining the process for both authors and editors. Portable peer review has long been touted as a way to streamline peer review but, for various reasons, has not yet taken off. Standardized peer review reports have many attractions from the author and reviewer points of view, but every journal has its own foibles and ways of doing things and would be neither able nor willing to use a standardized report. However, this should be possible within a single publishing house, for cascading manuscripts across its journal portfolio.

Shifting from “full-paper” peer review to targeted reviews, where reviewers evaluate specific sections (e.g., methodology, results) rather than entire manuscripts, helping to reduce workload. Participants discussed using AI to run simple checks on a manuscript, such as metadata, references, and protocols, leaving the results and discussion sections to the reviewer. Alternatively, as already happens with statistical review, several experts could each examine specific parts of the paper. Some even asked, “Do we need to review every paper?”

Enhancing professional recognition for reviewers by integrating peer review activity into funding and career assessments and offering tangible benefits such as article processing charge discounts, professional badges, and faster publication timelines for active reviewers. The reduced visibility of what used to be Publons was a source of regret for some, but newer players are actively recognizing peer review as a service that deserves its own rewards. ResearchHub, for instance, rewards reviewers on its platform with its own crypto tokens ($RSC), which can be spent elsewhere on the platform or withdrawn as currency. In the absence of a large cross-publisher scheme to mirror this, the larger publishers could certainly consider implementing something similar across their own platforms. Leaderboards and gamification could make reviewing fun while offering real benefits to those who contribute the most.

Although there was some back-and-forth on how to implement solutions, the problems themselves, and the need to address each one, achieved an unexpected level of consensus.

Each group presented their ideas, and participants voted on the most impactful solutions, refining them into actionable implementation plans for the final session.

2025 Workshop Session 3: Creating a Roadmap for Action

The final session focused on turning the best ideas into real-world initiatives. Groups outlined specific steps, stakeholders, and timelines needed to bring these innovations to life. The resulting roadmap included:

- Establishing a Publication Ethics & Evaluation Regulatory (PEER) body to standardize peer review practices, standards, and training programs within the next 12–24 months. This would involve setting a mission and vision, defining a membership strategy, developing guidelines, addressing legal and ethical considerations, and forming an executive board.

- Launching a UK-based pilot program for peer reviewer accreditation, with international expansion in later phases. This could form part of the regulatory body proposed above. The accreditation would include core quality indicators. The idea could go as far as placing the onus on authors to secure reviews from reviewers on the register and then submit their paper together with those reviews.

- Collaborating with STM to integrate peer review authentication into their ongoing researcher identity initiatives.3

- Developing a third-party platform for shared reviewer pools, reducing the burden on individual journals, and increasing reviewer efficiency. Again, this could form part of the regulatory body and reviewer register suggested above.

- Piloting a targeted peer review model, selecting a few journals to experiment with reviewing only critical sections of manuscripts instead of full papers.

Participants left the session energized, with commitments from several attendees to carry these projects forward beyond R2R. The focus here was on what each of us in the room could do to start the ball rolling on each initiative.

Where Do We Go from Here?

The closing discussion reinforced that the scholarly community is ready for change—but systemic challenges require collaboration between publishers, institutions, funders, and researchers. Several next steps were identified:

- Engaging key stakeholders, including STM, the European Association of Science Editors (EASE), the Committee on Publication Ethics (COPE), Future of Research Communication and e-Scholarship (FORCE11), ORCID, and institutional research offices, to support pilot projects.

- Conducting industry-wide surveys to gauge reviewer sentiment and identify further pain points.

- Tracking the success of the PEER initiative and accreditation pilots, ensuring they provide tangible benefits to reviewers and institutions alike.

One key takeaway was that peer review reform cannot happen in isolation. Real progress will require coordination across the ecosystem, sustained funding, and buy-in from researchers themselves.

This workshop at R2R 2025 successfully built upon the discussions from 2024, turning ideas into real plans. If the next year sees meaningful action from journals, funders, and institutions, peer review could soon be on a path toward a more transparent, efficient, and rewarding future.

Acknowledgements

The authors would like to thank all of the workshop participants for their wholehearted contributions to the conversations, debates, and outputs. We are also extremely grateful to Mark Carden, Jayne Marks, and the Researcher to Reader 2025 Conference organizing committee for the opportunity to develop and deliver this workshop.

References and Links

1. Alves T, De Boer J, Ellingham A, Hay E, Leonard C. Peer review innovations: insights and ideas from the Researcher to Reader 2024 Workshop. Sci Ed. 2024;47:56–59. https://doi.org/10.36591/SE-4702-04

2. https://stm-assoc.org/new-stm-report-trusted-identity-in-academic-publishing/

3. https://stm-assoc.org/what-we-do/strategic-areas/standards-technology/researcher-id-tfg/

Tony Alves (https://orcid.org/0000-0001-7054-1732) is with HighWire Press; Jason De Boer (https://orcid.org/0009-0002-6940-644X) is with De Boer Consultancy and Kriyadocs; Alice Ellingham (https://orcid.org/0000-0002-5439-7993) and Elizabeth Hay (https://orcid.org/0000-0001-6499-5519) are with Editorial Office Limited, and Christopher Leonard (https://orcid.org/0000-0001-5203-6986) is with Cactus Communications.

Opinions expressed are those of the authors and do not necessarily reflect the opinions or policies of the Council of Science Editors or the Editorial Board of Science Editor.